This post tackles how to investigate malware persistence during incident response.

We’re on a roll on this Intro to Incident Response series after its 2-year vacation. We’re up to posting #6, and we transition from a 3-part “sub-series” on Users to a 3-part “sub-series” on malware. Part 1 of this sub-series is about finding malware based on how it starts and its persistence mechanism.

We’ll start with a refresher on the series, dive into the topic of starting malware and persistence, and then show some examples using Cyber Triage.

Refresher on Approach

Because this series is as much about an investigation framework as it is about specific techniques, let’s make sure everyone is on the same page about our approach.

Earlier posts in the series covered it in more detail, but the basic concept is that we are all about breaking big intrusion investigation questions into smaller questions that can be answered based on data. There are three big questions under the question of “Is this computer compromised?”

- Is there suspicious user activity (such as sensitive files being accessed)?

- Are there malicious programs (such as malware)?

- Are there malicious system changes (such as relaxed security settings)?

The last three posts were about the first question, users. Now, we start to focus on the second question, malware.

Why We Investigate Malware in Incident Response

Investigating malware is a broad topic and means different things to different people. For some reverse engineering people, it’s all about finding out exactly what a specific piece of malware does. For most people though, it’s about identifying that it is there.

Malware is often used at some point in an attack, for example:

- A malicious attachment that is sent as part of a phishing campaign

- A keystroke logger that is run after an attacker gains access to a computer so that they can get passwords

- A Remote Access Tool (RAT) that performs operations based on messages from a command and control (C&C) server operated by the attacker.

So, as part of a thorough investigation, we need to look for evidence of malware.

As we noted in the series on investigating users, not every compromised computer will have malware. Once a user account has been compromised, the attacker may be able to operate without malware. So, a full investigation should be looking for both malware and user activity.

Malware comes in both commodity and advanced (i.e. APT) forms. The same concepts apply to both, but the difference is that the advanced forms do a much better job at blending in and are harder to detect.

Breaking “Malware” into Smaller Questions

Remember that our approach here is to break big questions into smaller questions that we know how to find data for. We are going to break our question of “Are there malicious programs on this computer?” into three smaller questions:

- Are there suspicious programs that start based on some trigger (such as when the computer starts, when a user logs in, or when a scheduled task fires)?

- Are there suspicious processes currently running?

- Are there remnants of past executions of known malicious programs?

In other words, we’re going to look at what programs get started, what programs are currently running, and known traces we can find.

This post will focus on finding malware when it starts.

Why Incident Responders Investigate Malware Starting and Persistence

A malicious executable file won’t cause any damage until the commands in it are executed. So, it’s in an attacker’s best interest to make sure their malware runs and persists across reboots. Now, not all malware wants to persist, but we’ll deal with that in the next part of the series.

From a triage perspective, a huge reason for focusing on triggers is because there are a lot of files and programs on a computer. We can reduce the number of possible suspects by initially focusing on those that are associated with some form of trigger.

Let’s consider some scenarios:

- A malicious executable program is added to the “Run” registry key in a user’s NTUSER.DAT registry hive. The program is run each time the user logs in (an entry in the “RunOnce” key, by contrast, is removed after it has run once)

- A malicious PowerShell script is added to the “Run” key and is executed each time the user logs in. There is no malicious executable file in this case, just the PowerShell script in the registry value. This is called “fileless malware”

- A malicious program is added as a Windows service and is executed each time the computer starts

- A malicious program is added as an action to a Scheduled Task and runs every 2 hours to reach out to a command and control server

- A scheduled task is created that causes a malicious program to get copied and executed on a remote computer every 2 hours.

- A Windows Management Instrumentation (WMI) action is created that causes a program (or script) to execute based on some other WMI-based trigger.
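The fileless scenario above often shows up as a registry value whose command line launches PowerShell with an encoded script. Here is a minimal Python sketch of how such a value could be flagged and decoded. It relies on the fact that PowerShell's -EncodedCommand option takes Base64 of a UTF-16LE string. The function names are hypothetical, and the whitespace tokenizing is a simplification that ignores quoting.

```python
import base64


def is_encoded_flag(token):
    """True if the token looks like -EncodedCommand or an abbreviation of it."""
    if not token.startswith(("-", "/")):
        return False
    name = token[1:].lower()
    # PowerShell accepts unambiguous prefixes (-e, -enc, ...) and the alias -ec.
    return name == "ec" or (name != "" and "encodedcommand".startswith(name))


def decode_powershell_payload(command_line):
    """Return the decoded script from a PowerShell command line, or None."""
    if "powershell" not in command_line.lower():
        return None
    tokens = command_line.split()
    # Look at each (flag, following value) pair.
    for flag, value in zip(tokens, tokens[1:]):
        if is_encoded_flag(flag):
            try:
                raw = base64.b64decode(value, validate=True)
                return raw.decode("utf-16-le")
            except ValueError:
                # Not valid Base64 or not valid UTF-16LE text.
                return None
    return None
```

A value that decodes to a script is not automatically malicious, but it deserves a closer look, because legitimate startup entries rarely hide their commands this way.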

What Kinds Of Malware Persistence Should Incident Response Professionals Know?

Attackers are finding new ways of triggering malware all the time, and there are dozens of ways they can persist in a computer or network.

But, they broadly fit into:

- When the computer starts

- When a user logs in

- Based on some time-based schedule

- Piggybacking on the execution of a legitimate application, either by launching malware instead of the intended application or by injecting DLLs when the legitimate application launches

- Basically, any event that occurs in Windows that WMI can monitor.

Malware Persistence Trigger Locations Incident Responders Should Know

Given that there are so many ways to make triggers, I’m not going to make an exhaustive list of Windows locations because it would take pages, and no one would fully read it.

It’s the job of your DFIR software to know about every location.

But, you should know about the major locations:

- Registry: There are dozens of registry keys that can be used for persistence

- Many are commonly referred to as the “autoruns” locations because the Microsoft Sysinternals “Autoruns” program is an authoritative source for all of the locations

- This is also the place that you’ll find “Hot Keys” that allow attackers to get a command prompt when they type in certain key combinations on a remote desktop login prompt

- You’ll also find clues here about what programs actually get launched. An old school approach is to force malware to initially launch instead of, for example, Microsoft Word. The malware will eventually launch Word, but only after starting its malicious code

- Startup Folder: Each user has a folder that contains links or executables that are run each time they log in

- Scheduled Tasks: These run either on a time-based schedule or when a user logs in. They can run one or more programs at a time. A typical computer already has many of these to perform routine tasks

- WMI Actions: WMI was designed to help system administrators automate their tasks. WMI Actions can be triggered based on a schedule, login, or lots of other events. WMI is often used for “fileless” persistence, where the action runs a PowerShell script instead of an executable stored on disk

- Windows Loading Path: Techniques such as DLL search order hijacking (often loosely called “DLL injection”) work by tricking a legitimate application into loading a malicious library. Attackers achieve this by modifying the PATH environment variable, putting their library early in the path, or adding their library to the same folder as the target application.

This data is primarily stored on the computer’s disk, but can also be found from a memory capture.
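To make the major locations concrete, here is a small Python sketch that lists a few well-known Windows persistence locations and groups them by the kind of trigger. It is far from exhaustive, and the trigger labels and the function name are illustrative choices rather than a standard taxonomy.

```python
from collections import defaultdict

# (trigger, kind, location): a few well-known examples only, not a full list.
PERSISTENCE_LOCATIONS = [
    ("login", "registry", r"HKCU\Software\Microsoft\Windows\CurrentVersion\Run"),
    ("login", "registry", r"HKLM\Software\Microsoft\Windows\CurrentVersion\Run"),
    ("login", "folder",
     r"%APPDATA%\Microsoft\Windows\Start Menu\Programs\Startup"),
    ("boot", "registry", r"HKLM\SYSTEM\CurrentControlSet\Services"),
    ("schedule", "folder", r"%SystemRoot%\System32\Tasks"),
    ("piggyback", "registry",
     r"HKLM\Software\Microsoft\Windows NT\CurrentVersion"
     r"\Image File Execution Options"),
    ("wmi", "folder", r"%SystemRoot%\System32\wbem\Repository"),
]


def locations_by_trigger(locations=PERSISTENCE_LOCATIONS):
    """Group the location strings by the trigger that launches them."""
    grouped = defaultdict(list)
    for trigger, _kind, location in locations:
        grouped[trigger].append(location)
    return dict(grouped)
```

Grouping by trigger mirrors the categories listed earlier (computer start, user login, schedule, piggybacking, and WMI events), which makes it easier to check that a collection covers each category at least once.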

How To Analyze The Triggered Programs

All of the above triggers are used for normal legitimate uses, so their existence alone is not usually suspicious. It’s really about the program or script that gets executed.

Let’s look at how we can analyze those programs:

- File Path: The location of the program may cause it to be suspicious. Some malware variants like to use folders like AppData or Temp, which is not what traditional startup programs use. Though some auto-update programs that periodically reach out to look for new versions of a program do exist in AppData, so this technique alone is not enough to identify a program as malicious

- Times: If you have a time frame that you think the incident occurred in, you can use the file and registry timestamps to detect suspicious items. The attacker may have modified some of the file timestamps to blend in (some programs will set the same times as other files in the same folder), but with NTFS-based files, you can also compare against the timestamps in the $FILE_NAME attribute, which are much harder for common tools to alter than those in the $STANDARD_INFORMATION attribute

- Cryptographic Signature: Many legitimate programs are signed by the vendor. Some malware is not. You can use the lack of a signature as a method of identifying suspicious startup items. But, there is malware out there that used a stolen certificate to sign itself

- Occurrence on Other Systems: Many corporate environments have similar configurations for each endpoint. You can compare the triggers of the target system with a baseline or other systems to determine if it has unique entries

- Blacklists/Threat Intelligence: The file’s MD5 or SHA256 cryptographic hash value could match some previously seen malware. To perform this kind of analysis, you need access to a threat intelligence feed or one or more anti-virus products

- Static Analysis: Some attackers modify malware ever so slightly for each victim so that the cryptographic hashes change. So, you should also use static analysis techniques by looking at other signatures in the program. You can do this with Yara rules or anti-virus products. You may need to unpack files that have been obfuscated to do this

- Dynamic Analysis: The 2nd most time-intensive process is to perform dynamic analysis, which involves running the program in some form of safe, sandboxed environment and monitoring what it does. This is needed when the program has not been seen before, but some malicious programs can detect dynamic analysis and stay on their best behavior. You may also need to let it run for a while before it performs a task

- Reverse Engineering: By far the most time-intensive and challenging process is manual reverse engineering. This involves one or more skilled people who use dynamic analysis and decompile the code to figure out what a program can do. This is beyond triage and the capabilities of most incident response teams.
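The file path check from the list above is the easiest of these techniques to automate. Here is a minimal Python sketch, assuming a small, hypothetical set of folder names that are unusual for startup programs. As noted above, a match is only a reason to look closer, since some legitimate updaters also live in AppData.

```python
from pathlib import PureWindowsPath

# Hypothetical starting set; tune it for your own environment.
UNUSUAL_FOLDERS = {"appdata", "temp", "tmp"}


def in_unusual_location(program_path):
    """True if any parent folder of the program is in UNUSUAL_FOLDERS."""
    # PureWindowsPath parses Windows paths on any operating system.
    parents = PureWindowsPath(program_path).parent.parts
    return any(part.lower() in UNUSUAL_FOLDERS for part in parents)
```

For example, `in_unusual_location(r"C:\Users\bob\AppData\Roaming\svch0st.exe")` is true, while a program under `C:\Program Files` is not flagged.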

Many incident response teams rely heavily on antivirus products to detect malware, and services that integrate many AV products provide a broad perspective on files. That is useful for detecting new malware. Examples include ReversingLabs, VirusTotal, PolySwarm, and OPSWAT.
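Before a file is sent to any of these services, the hash-based blacklist check described above can be done locally. Here is a minimal Python sketch using the standard hashlib module. The known_bad set stands in for whatever threat intelligence feed you have access to, and the function names are hypothetical.

```python
import hashlib


def file_hashes(data):
    """Return the MD5 and SHA-256 hex digests of a file's bytes."""
    return {
        "md5": hashlib.md5(data).hexdigest(),
        "sha256": hashlib.sha256(data).hexdigest(),
    }


def matches_blacklist(data, known_bad):
    """True if either digest appears in the set of known-bad hashes."""
    return any(digest in known_bad for digest in file_hashes(data).values())
```

Remember the limitation noted under static analysis: a single changed byte produces a different hash, so a miss here says nothing about whether the file is safe.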

Finding Malware Persistence with Incident Response Software Cyber Triage

Cyber Triage collects and analyzes artifacts associated with malware persistence, and we’ll show you how. If you’d like to follow along, download a free evaluation copy of Cyber Triage before reading on.

The file system data needed to detect the persistence is collected by one of our agentless collection methods. Our collection tool will use The Sleuth Kit to locate registry hives (even when they are locked), load the hive using our open-source library, and parse the numerous keys and values. The associated executables are then located (again via The Sleuth Kit) and bundled up for later analysis. It will also locate Scheduled Task configuration files, WMI databases (in the forthcoming 2.9 release), and more.

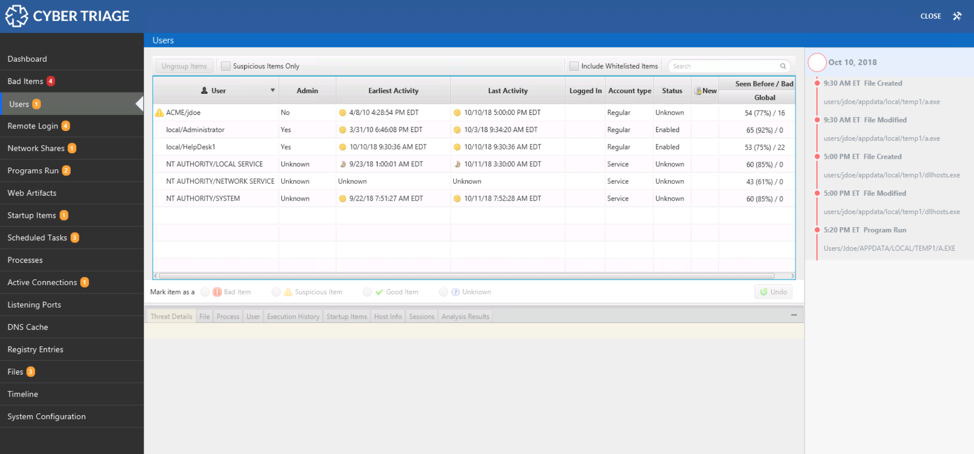

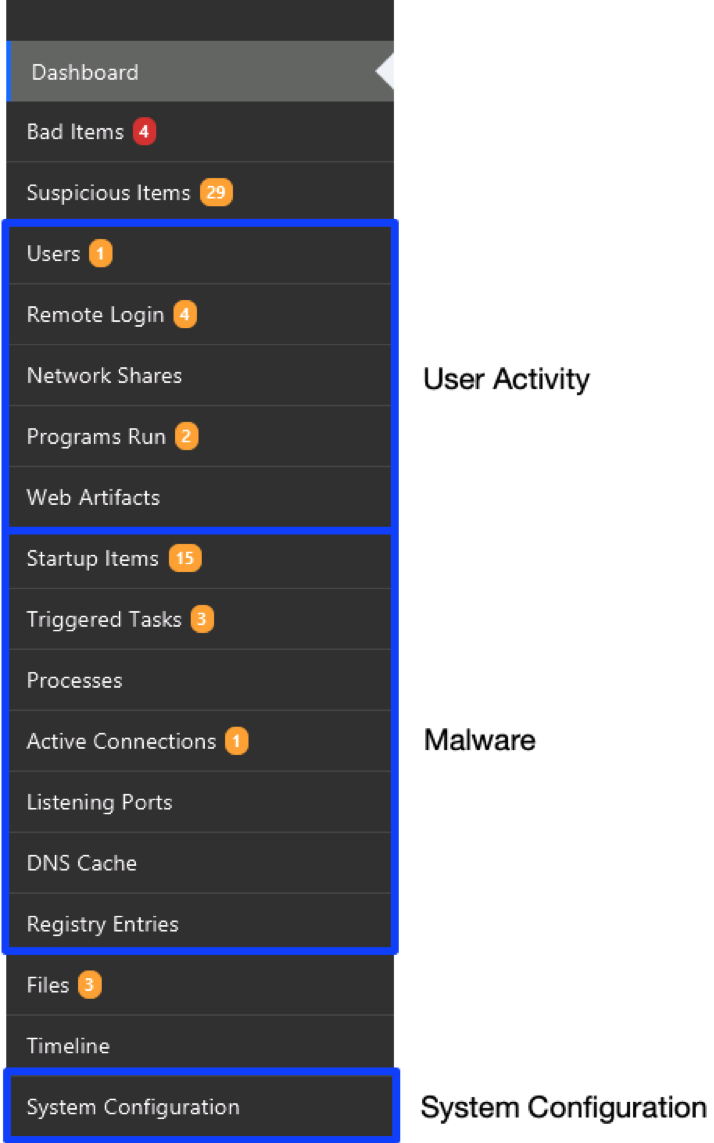

The navigation menus on the left-hand side of Cyber Triage are organized based on the same ideas that this blog series uses. The first items are all about users. The next set is about malware. The last is about system configuration.

For the persistence-based questions, we’re going to focus on the first two items in the malware section, which are “Startup Items” and “Triggered Tasks.”

Startup Items Section

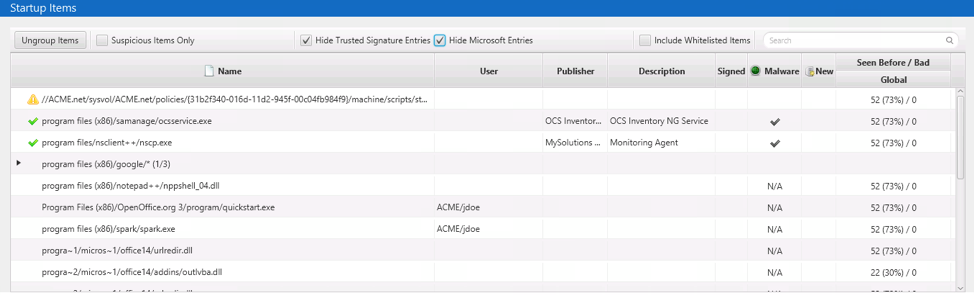

The “Startup Items” section contains the programs and items from the dozens of “Autoruns” locations that are triggered based on computer startup or user logins.

Each of these locations has its own way of storing the startup information, and Cyber Triage normalizes them to a core set of data so that you can review them without needing to know the details of each.
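To illustrate what normalizing means here, the Python sketch below maps three different sources onto one common record. This is not Cyber Triage's actual data model. The StartupItem fields and the helper names are hypothetical and chosen only to show the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StartupItem:
    trigger: str   # what causes the program to start
    source: str    # where the entry was found
    name: str      # entry name (value name, service name, link name)
    command: str   # program or command line that gets launched


def from_run_value(hive, value_name, value_data):
    """A value under a Run key: launched when the user logs in."""
    key = hive + r"\Software\Microsoft\Windows\CurrentVersion\Run"
    return StartupItem("login", key, value_name, value_data)


def from_service(service_name, image_path):
    """A service entry; treated as boot-time here, which is a simplification
    because the real start type depends on the service configuration."""
    key = r"HKLM\SYSTEM\CurrentControlSet\Services" + "\\" + service_name
    return StartupItem("boot", key, service_name, image_path)


def from_startup_folder(folder, link_name, target):
    """A link in a user's Startup folder: launched when that user logs in."""
    return StartupItem("login", folder, link_name, target)
```

Once every source produces the same record, the later analysis steps (path checks, hash lookups, signature checks) only need to be written once.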

The items are grouped based on the parent folder to make it easier to review and focus on anomalous locations:

To reduce the amount of data you initially look at, programs signed by Microsoft and known authorities are hidden (unless they get scored as suspicious by the various content-based analysis techniques).

When the collected data is imported into Cyber Triage, it will perform various analysis techniques and assign scores. Those are outlined in a subsequent section.

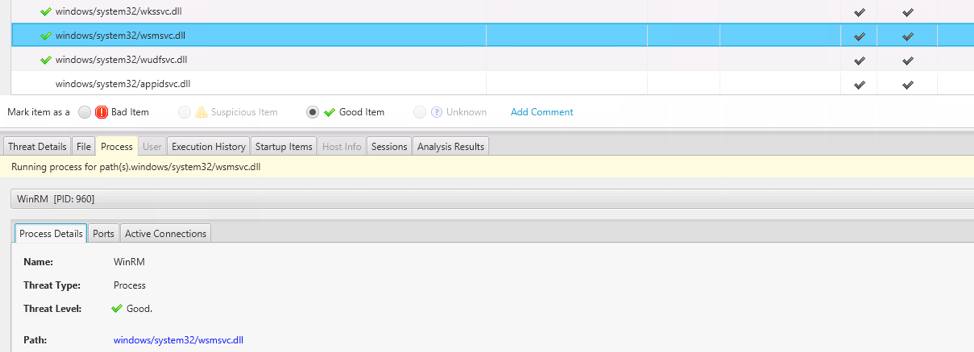

The bottom set of tabs allows you to get more context about the program, such as:

- File metadata for the program being run

- Malware scan results for the file, PE headers, strings, etc.

- If the program is currently running and, if so, any network connections associated with it

- If there is evidence that it was run and when

- If other computers have the same item or if this is unique.
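The last item, checking whether other computers have the same entry, is a simple frequency count at its core. Here is a minimal Python sketch of that idea, not of how Cyber Triage implements it. It assumes each startup item has already been reduced to a comparable string such as a path or hash, and the function name is hypothetical.

```python
from collections import Counter


def rare_items(items_by_host, max_hosts=1):
    """For each host, list the items seen on at most max_hosts hosts."""
    counts = Counter()
    for items in items_by_host.values():
        # Count each item once per host, even if it is listed twice.
        counts.update(set(items))
    return {
        host: sorted(item for item in set(items) if counts[item] <= max_hosts)
        for host, items in items_by_host.items()
    }
```

In an environment where endpoints share a similar configuration, the items that survive this filter are the ones worth reviewing first.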

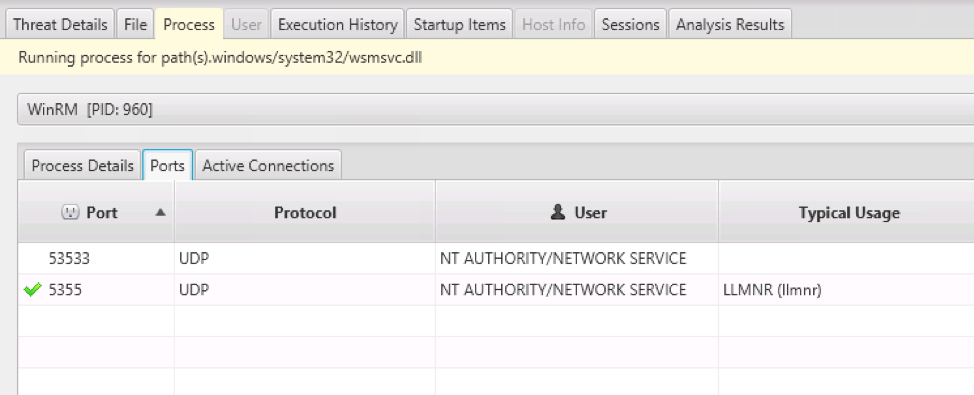

As a non-malicious example, if we look at the entry for the WinRM Service (which gets started each time Windows starts), we can see that it was still running when the capture was performed…

…and had two UDP ports open:

As with all other data types in Cyber Triage, you can mark an item as “Bad,” “Suspicious,” or “Good” so that it can be reported or followed up on.

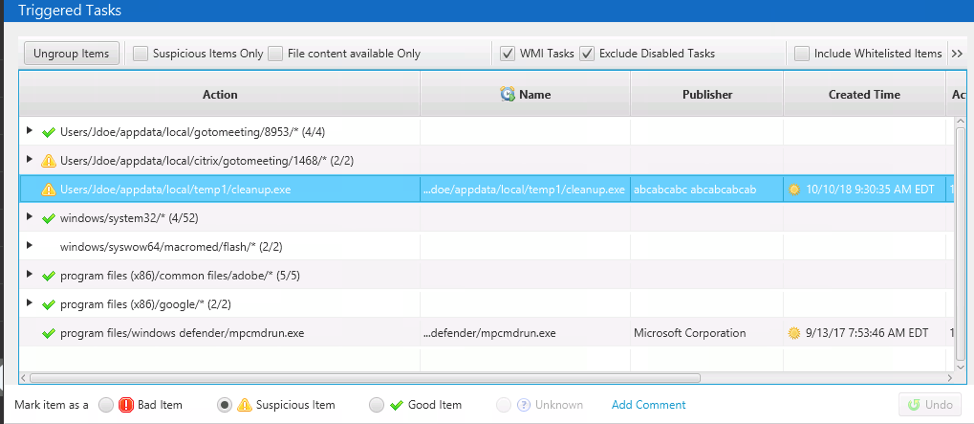

Triggered Tasks Area

The “Triggered Tasks” area contains items from Scheduled Tasks and WMI Actions. These are persistence techniques that operate based on things besides only the computer starting or users logging in.

Like the “Startup Items” section, the items here are grouped based on parent path and undergo analysis based on the programs that are executed (which are outlined in the next section).

From this area, you can see on the bottom set of tabs if the program is still running and how often it was run. You can also mark the item as “Bad,” “Suspicious,” or “Good.”

NOTE: In the 2.8 and earlier releases, this section was called “Scheduled Tasks.”
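If you ever need to review a Scheduled Task by hand, the task definitions are XML documents, and the programs they run are listed under the Actions element. Here is a minimal Python sketch that pulls out each command and its arguments using the standard library. The sample task is made up for illustration, and real task files may use a different encoding (often UTF-16), so read them as bytes before parsing.

```python
import xml.etree.ElementTree as ET

# XML namespace used by Windows Task Scheduler definitions.
TASK_NS = {"t": "http://schemas.microsoft.com/windows/2004/02/mit/task"}

# Made-up example task; the path and arguments are hypothetical.
SAMPLE_TASK = r"""<Task version="1.2"
    xmlns="http://schemas.microsoft.com/windows/2004/02/mit/task">
  <Actions Context="Author">
    <Exec>
      <Command>C:\Users\bob\AppData\Local\Temp\updater.exe</Command>
      <Arguments>--beacon</Arguments>
    </Exec>
  </Actions>
</Task>"""


def task_actions(task_xml):
    """Return a list of (command, arguments) pairs from a task definition."""
    root = ET.fromstring(task_xml)
    actions = []
    for exec_el in root.findall("t:Actions/t:Exec", TASK_NS):
        command = exec_el.findtext("t:Command", default="", namespaces=TASK_NS)
        arguments = exec_el.findtext("t:Arguments", default="", namespaces=TASK_NS)
        actions.append((command, arguments))
    return actions
```

The extracted command can then be run through the same checks as any other startup program, such as the path, hash, and signature checks.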

Analysis Techniques for Discovering Malware Persistence During Incident Response

Regardless of the specific type of trigger, a variety of analysis techniques are performed on the program or script.

These include:

- Analysis of the program path, and if it is in an atypical location

- Uploading of the file to ReversingLabs so that it can be analyzed by 40+ analysis engines

- Scan by user-supplied Yara rules

- Lookup of the file’s MD5 hash value in both the NIST NSRL and any blacklist hash sets from threat intelligence

- Validation of the cryptographic signature on the file

- Other special features associated with malware, such as funny characters or long registry keys.
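As an illustration of the last item in the list, here is a minimal Python sketch that flags registry value names with unusual characters or unusual length. The checks and the 100-character threshold are assumptions made for this example, not Cyber Triage's actual rules.

```python
def name_anomalies(value_name, max_length=100):
    """Return a list of reasons a registry value name looks unusual."""
    findings = []
    if len(value_name) > max_length:
        findings.append("unusually long name")
    if any(not ch.isprintable() for ch in value_name):
        # Embedded NULs or control characters can hide entries from some tools.
        findings.append("non-printable characters")
    if any(ord(ch) > 127 for ch in value_name):
        # Common in localized software, so treat this as a weak signal only.
        findings.append("non-ASCII characters")
    return findings
```

An empty list means none of these checks fired. It does not mean the entry is benign.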

The broad set of collection locations and analysis techniques allows Cyber Triage users to quickly review a large number of persistence mechanisms.

Conclusion

Malware analysis is critical to incident response, and one approach is to look for persistence mechanisms. There are dozens of places to look, and automation is critical to ensure full coverage.

If you’d like to start testing Cyber Triage on some of these tasks, download your evaluation copy today.