We often get asked how our Collector differs from others, and we’ve been forced to start defining some terms. I started calling ours an adaptive file collector. Never heard that phrase? Then read on.

This post is about static vs. adaptive collections, and how an adaptive collector resolves paths within artifacts to bring back more data for you to analyze. You should use an adaptive collector if you want to bring back as much relevant data as possible in the shortest amount of time.

Goals of Not Making a Full Image

This post is all about situations when you are not going to make a full image of a disk. This often happens because:

- You don’t have time to copy it all

- You don’t have the authority to access all data

- You only want a small subset of the total data set

Your ultimate goal is to get all of the relevant data in as little time as possible.

Static Collectors and Scanners

If your goal is to copy less than everything, then you’ll need some kind of rules to decide what to bring back.

There have traditionally been a few categories of collection tools:

- IOC Scanners: These generate alerts if any file matches rules based on threat intelligence. Example rules include file hashes, names, or YARA rules. The goal of these tools is less about preserving the system and more about knowing how to prioritize the system for a more complete collection.

- Source File Collectors: These copy files based on a set of file metadata rules, such as full file paths, extensions, and sizes. Example rules include copying registry hives and event logs based on their paths, or copying all JPG files within a certain date range.

- Artifact Collectors: These focus on artifacts instead of files. They copy the attributes of system artifacts, such as running processes, network connections, and logged-in users. Because these data sets are often small, no additional rules or filtering are needed. All data is copied.

I started using the term ‘static’ to describe these approaches because they begin with a fixed set of rules, and only what matches those rules gets alerted on or copied. The user may be able to customize the rules before the collection starts, but they are fixed after that.

Common examples we often come across include:

- THOR Lite – A free IOC scanner

- KAPE – A free static source file and artifact collector that can be customized

- FTK Imager – Can create a full disk image or copy files that meet certain rules

- Sysinternals – A set of tools from Microsoft that will copy artifacts

Downsides of Static File Collections

The primary downside of a static file collection tool is that you often won’t know up front all of the rules needed to copy the relevant data. Each system is different.

Therefore, you’ll either end up missing relevant data or you’ll have to make rules that are so broad that you collect much more than you need.

For example, for an intrusion investigation you may have either a:

- Narrow focus and copy only registry hives, event logs, and other databases, but miss the scripts, executables, or other files the attacker put on the system.

- Broad focus and copy all files created within the past 30 days. The 30-day window may not be long enough to cover the attack, and it may pull in many irrelevant files that were recently created by an application update.
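To make the trade-off concrete, here is a minimal sketch of the two static rule sets above, run against a hypothetical system. The file list, dates, the `update_1.dll` file, and the function names are all made up for illustration; real tools like KAPE use their own rule formats. The point is only that both rule sets are fixed before collection and neither one catches the attacker’s executable.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from fnmatch import fnmatchcase


@dataclass
class FileInfo:
    path: str
    created: datetime


# Narrow rule set (illustrative): fixed path patterns for hives and event logs.
NARROW_PATTERNS = [
    r"c:\windows\system32\config\*",
    r"c:\windows\system32\winevt\logs\*.evtx",
]


def narrow_rule(f: FileInfo) -> bool:
    # Lowercase the path because Windows paths are case-insensitive.
    return any(fnmatchcase(f.path.lower(), p) for p in NARROW_PATTERNS)


def broad_rule(f: FileInfo, now: datetime, days: int = 30) -> bool:
    # Broad rule set: anything created within the last `days` days.
    return now - f.created <= timedelta(days=days)


def static_collect(files, rule):
    # The rule is chosen before collection starts and never changes during it.
    return [f.path for f in files if rule(f)]


# Hypothetical system state.
now = datetime(2024, 6, 1)
files = [
    FileInfo(r"C:\Windows\System32\config\SOFTWARE", datetime(2023, 1, 15)),
    # Attacker's executable, dropped 52 days before the collection.
    FileInfo(r"C:\ProgramData\Microsoft\Windows\Caches\flush.exe", datetime(2024, 4, 10)),
    # Recent but irrelevant file from an application update.
    FileInfo(r"C:\Program Files\ExampleApp\update_1.dll", datetime(2024, 5, 28)),
]

narrow = static_collect(files, narrow_rule)                  # only the SOFTWARE hive
broad = static_collect(files, lambda f: broad_rule(f, now))  # only the update DLL
```

The narrow rules return only the hive, and the broad rules return only the recent update DLL, so `flush.exe` is missed either way.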

Adaptive Collectors

An adaptive collector starts off with a set of rules, but will also parse files and artifacts to identify additional files to collect. It adapts to the system.

If it finds a file path in an artifact, it will copy that file, and parse it too. It will collect all of the executables associated with scheduled tasks, startup items, or downloads.
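The first step of adapting is noticing a file path inside an artifact value. Here is a minimal sketch, assuming artifact values arrive as plain strings (for example, a registry value or a scheduled task command). The regular expressions, the `extract_paths` and `expand_env` names, and the environment-variable mapping are our own illustrative choices, not how the Cyber Triage Collector does it; a real collector would also handle relative paths and many more edge cases.

```python
import re

# A double-quoted drive path (may contain spaces), or an unquoted drive path
# that ends at whitespace, a quote, or a comma.
_PATH_RE = re.compile(r'"([A-Za-z]:\\[^"]+)"|\b([A-Za-z]:\\[^\s",]+)')
_ENV_RE = re.compile(r"%([^%]+)%")


def expand_env(text: str, env: dict) -> str:
    """Replace %NAME% using env (keys in upper case); unknown names are left as-is."""
    return _ENV_RE.sub(lambda m: env.get(m.group(1).upper(), m.group(0)), text)


def extract_paths(value: str, env=None) -> list:
    """Return absolute Windows file paths referenced in an artifact string."""
    text = expand_env(value, env or {})
    return [m.group(1) or m.group(2) for m in _PATH_RE.finditer(text)]


# Example artifact values (hypothetical).
run_value = r'"C:\Program Files\Example App\app.exe" --background'
task_value = r"%ProgramData%\Microsoft\Windows\Caches\flush.exe"
env = {"PROGRAMDATA": r"C:\ProgramData"}  # would come from the target system
```

Calling `extract_paths(run_value)` keeps the quoted path with its spaces and drops the `--background` argument, and `extract_paths(task_value, env)` expands the variable before matching.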

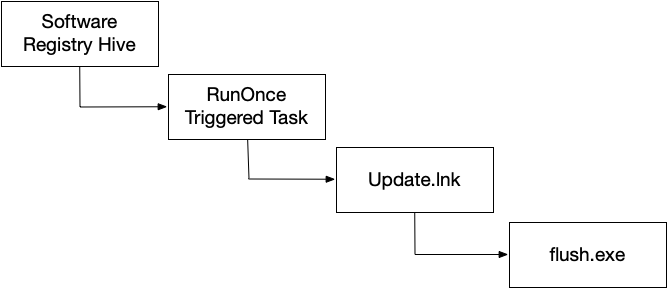

Adaptive collections can go through several layers of recursion. For example, it could:

- Find the SOFTWARE registry hive (C:\Windows\System32\config\SOFTWARE) based on its path and collect it

- Parse the registry and focus on the RunOnce key (amongst others)

- Identify the C:\Users\Jdoe\AppData\Local\Temp\update.lnk file in the RunOnce key. This file would be run the next time a user logs on.

- Find the update.lnk file based on its path and collect it

- Parse the LNK file and identify that it references C:\ProgramData\Microsoft\Windows\Caches\flush.exe

- Find the flush.exe file and collect it
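The recursion in these steps can be sketched as a breadth-first worklist: collect a file, parse it, and queue whatever it references. In this minimal sketch, the `REFERENCES` table is a hypothetical stand-in for real registry and LNK parsers, and `adaptive_collect` is an illustrative name, not Cyber Triage code.

```python
from collections import deque

SOFTWARE = r"C:\Windows\System32\config\SOFTWARE"
UPDATE_LNK = r"C:\Users\Jdoe\AppData\Local\Temp\update.lnk"
FLUSH_EXE = r"C:\ProgramData\Microsoft\Windows\Caches\flush.exe"

# Hypothetical stand-in for real parsers: what parsing each collected file
# would reveal (a RunOnce value in the hive, the target of the LNK file).
REFERENCES = {
    SOFTWARE: [UPDATE_LNK],
    UPDATE_LNK: [FLUSH_EXE],
    FLUSH_EXE: [],
}


def parse_for_paths(path):
    return REFERENCES.get(path, [])


def adaptive_collect(seed_paths, parse, max_depth=10):
    """Breadth-first collection: copy each file, parse it, queue what it references."""
    collected, seen = [], set()
    queue = deque((path, 0) for path in seed_paths)
    while queue:
        path, depth = queue.popleft()
        key = path.lower()  # Windows paths are case-insensitive
        if key in seen:
            continue  # already collected; this also breaks reference cycles
        seen.add(key)
        collected.append(path)  # a real collector would copy the file here
        if depth < max_depth:
            queue.extend((ref, depth + 1) for ref in parse(path))
    return collected


static_result = [SOFTWARE]  # a static path rule stops at the hive
adaptive_result = adaptive_collect([SOFTWARE], parse_for_paths)
# adaptive_result -> [SOFTWARE, UPDATE_LNK, FLUSH_EXE]
```

The `seen` set and `max_depth` limit are there so a malicious or circular set of references cannot make the collection run forever.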

The adaptive approach copied the LNK and EXE files, which a static collector would have missed. Those files are what the investigator needs to determine whether there is malicious activity.

| Static Collection | Adaptive Collection |
| --- | --- |
| C:\Windows\System32\config\SOFTWARE | C:\Windows\System32\config\SOFTWARE |
| | C:\Users\Jdoe\AppData\Local\Temp\update.lnk |
| | C:\ProgramData\Microsoft\Windows\Caches\flush.exe |
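The step from update.lnk to flush.exe depends on reading the shortcut’s target out of the LNK file. Below is a minimal sketch based on our reading of the Shell Link binary format ([MS-SHLLINK]): it skips the optional target ID list and reads the LocalBasePath and CommonPathSuffix strings from the LinkInfo structure. It assumes ANSI strings in code page 1252, ignores the CLSID check, Unicode path fields, and network targets, and the function names are our own. A production parser handles far more than this.

```python
import struct

# Flag bits from the [MS-SHLLINK] specification.
HAS_LINK_TARGET_ID_LIST = 0x00000001        # LinkFlags bit A
HAS_LINK_INFO = 0x00000002                  # LinkFlags bit B
VOLUME_ID_AND_LOCAL_BASE_PATH = 0x00000001  # LinkInfoFlags bit A

SHELL_LINK_HEADER_SIZE = 0x4C


def _read_c_string(data: bytes, offset: int) -> str:
    """Read a NUL-terminated ANSI string (assumed to be cp1252 here)."""
    end = data.index(b"\x00", offset)
    return data[offset:end].decode("cp1252", errors="replace")


def lnk_local_target(data: bytes):
    """Return the local target path recorded in a .lnk file's LinkInfo, or None."""
    (header_size,) = struct.unpack_from("<I", data, 0)
    if header_size != SHELL_LINK_HEADER_SIZE:
        raise ValueError("not a shell link (unexpected HeaderSize)")
    (link_flags,) = struct.unpack_from("<I", data, 0x14)

    offset = SHELL_LINK_HEADER_SIZE
    if link_flags & HAS_LINK_TARGET_ID_LIST:
        # LinkTargetIDList: a 2-byte IDListSize followed by that many bytes.
        (id_list_size,) = struct.unpack_from("<H", data, offset)
        offset += 2 + id_list_size

    if not (link_flags & HAS_LINK_INFO):
        return None

    # Fixed part of the LinkInfo header: seven little-endian 32-bit fields.
    (_size, _header_size, info_flags, _volume_id_offset,
     local_base_path_offset, _network_offset,
     common_path_suffix_offset) = struct.unpack_from("<7I", data, offset)
    if not (info_flags & VOLUME_ID_AND_LOCAL_BASE_PATH):
        return None  # e.g. a network-only target; not handled in this sketch

    base = _read_c_string(data, offset + local_base_path_offset)
    suffix = _read_c_string(data, offset + common_path_suffix_offset)
    return base + suffix
```

To use it on a copied shortcut, read the file’s bytes with `open(path, "rb").read()` and pass them to `lnk_local_target`; the returned path can then be queued for collection like any other reference.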

Benefits of Adaptive Collections

The primary benefit of an adaptive collection is that you get a more complete collection without overly broad rules that result in very large collections.

You’ll never know ahead of time all of the items that will be relevant, and an adaptive collection increases your chances of getting as much as possible on the first attempt.

Cyber Triage Collector is Adaptive

The Cyber Triage Collector has always been an adaptive collection tool (we just didn’t initially call it that). It parses artifacts on the system and copies the items those artifacts refer to, including:

- “Autoruns” registry keys

- Scheduled tasks

- WMI Actions

- BITS jobs

- MRU keys

- Startup folders

- Prefetch files

- …

In addition, it will:

- Parse LNK files and resolve their targets

- Parse rundll32 commands and resolve the DLL argument

- … and other techniques we’ve learned over the years to focus on the relevant data
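Resolving the DLL argument of a rundll32 command can be sketched in a few lines. This is a minimal sketch, not the Collector’s logic: `rundll32_dll` and `_split_first` are hypothetical names, the example paths are made up, and treating a bare DLL name as living in C:\Windows\System32 is a simplifying assumption (the real Windows DLL search order is more involved).

```python
import ntpath


def _split_first(text: str, stop_chars: str):
    """Split off the first token, honoring double quotes; return (token, rest)."""
    text = text.lstrip()
    if text.startswith('"'):
        end = text.find('"', 1)
        if end == -1:  # unterminated quote: take the rest of the string
            return text[1:], ""
        return text[1:end], text[end + 1:]
    for i, ch in enumerate(text):
        if ch.isspace() or ch in stop_chars:
            return text[:i], text[i:]
    return text, ""


def rundll32_dll(command: str, system_dir: str = r"C:\Windows\System32"):
    """Return the DLL path a rundll32 command line would load, or None."""
    exe, rest = _split_first(command, "")
    if ntpath.basename(exe).lower() not in ("rundll32", "rundll32.exe"):
        return None
    # rundll32 takes "DLL,EntryPoint [args]", so the DLL ends at a comma or space.
    dll, _ = _split_first(rest, ",")
    if not dll:
        return None
    if not ntpath.dirname(dll):
        # Simplifying assumption: bare names resolve to the system directory.
        dll = ntpath.join(system_dir, dll)
    return dll


cmd = r'"C:\Windows\System32\rundll32.exe" "C:\Program Files\Example\helper.dll",Run'
```

Using `ntpath` rather than `os.path` keeps the Windows path handling consistent even when the analysis runs on Linux or macOS.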

Our goal is to make your collection as complete and fast as possible, and we use adaptive approaches to do that.

Cyber Triage Collector Basics

The Cyber Triage Collector is a separate program from the main Cyber Triage application. It’s available in both the free and paid versions. The Collector runs on the target live system and copies the data needed by the main Cyber Triage application.

More details can be found here, but the key concepts are:

- It’s a single Windows executable, which makes it easy to deploy and move around.

- It doesn’t need to be installed. It just needs to be copied to a computer and launched via PowerShell, EDR, or manually.

- Its output is a JSON file that contains source files and artifacts.

- The output can be sent up to cloud storage (S3 or Azure), the Cyber Triage server, or to a local file.

The simplest way to run it is:

- Extract it from the Cyber Triage application

- Copy it to the target system

- Right click on it and choose “Run as administrator”

- The results will be saved to a JSON file in the current folder

To change its behavior, supply command-line arguments or use the GUI wrapper. You can find more details in the user manual.

Adaptive Collections are Key

If your goal is to get the relevant data as quickly as possible, then you need an adaptive collection tool. If you don’t have one, you can try out Cyber Triage using the free 7-day evaluation. Fill out this form, download the software, extract the collector and run it on a test system.